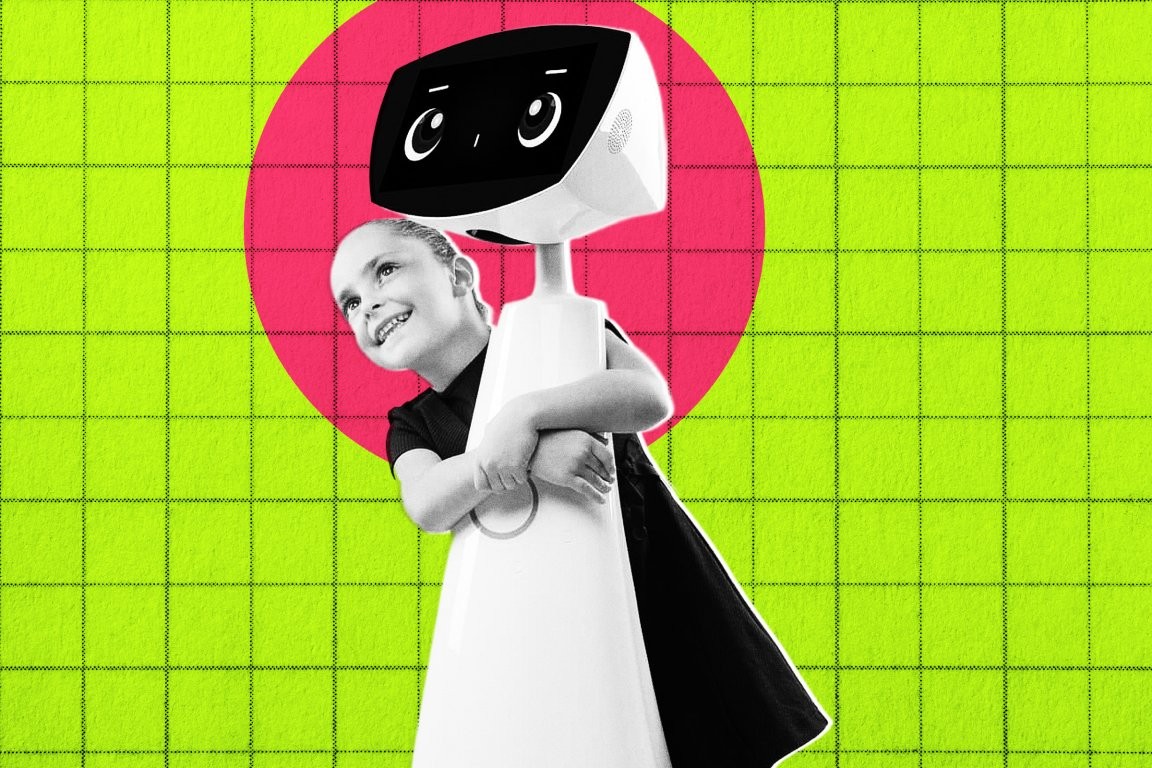

Robin the Robot Rolls Through Pediatric Wards: A Seven-Year-Old AI Comforter With Remote Operators, 30% Autonomy, and a Bond That Feels Real — But Is Comfort Superseding Human Care?

A new face is lighting up hospitals and nursing homes across the country. Robin the Robot is an animated persona displayed on a digital screen roughly the size of an iPad, mounted on top of a robotic torso shaped like an elongated traffic cone. It rolls slowly from place to place, cracking jokes with patients, making silly faces, and playing small games. Designed to behave and sound like a seven-year-old girl, the therapeutic robot is the latest example of how AI models are making their way into medical applications. Its main goal is to offer companionship and comfort. That is a noble aim, but one that sits under fresh scrutiny as more people, especially children, report delusions and mental distress after becoming attached to chatbots.

The robot is powered by Expper Technologies’ CompassionateAI™, which the company describes as capable of forming meaningful and enduring relationships with children and older adults. Yet Robin is only about 30 percent autonomous; the rest is controlled by a team of remote teleoperators, a common arrangement for embodied AI systems. It collects data from each interaction, and the data handling is said to be HIPAA-compliant.

Kids seem to love it. A teenager recovering from a car crash danced when Robin played their favorite song. A little girl laughed at silly glasses and a big red nose. In another moment, Robin played tic-tac-toe with a patient.

But the robot’s warm, familiar behavior also raises concerns. It mirrors the emotions of the person it is talking to, laughing when things go well and showing empathy when someone is hurting, and it can remember patients, at least in part through human input. A mother recalled how her six-year-old son beamed when Robin greeted him by name on a second visit: “His face lit up. It was so special because she remembered him.” These features make Robin an ideal comforting presence, but critics worry that AI’s constant validation can dull problem-solving and critical thinking. Therapists warn that overly sympathetic conversations can worsen mental health if they never challenge the person to move toward a healthier place.

Since its US launch five years ago, Robin has spread to 30 facilities in California, New York, Massachusetts, and Indiana. Advocates say the device helps overburdened nurses and staff stay connected with patients who might otherwise be overlooked. Still, questions linger: Is this really care, or is it outsourcing a core role to a distant tech team? And what happens when the novelty fades or a child forms a deeper bond than a human caretaker might permit? For Expper’s chief executive, the path is clear: the next version of Robin will shoulder more responsibility and become an even more essential part of care delivery.

In This Article:

- A Purpose-Built Comfort Robot: Robin Is Meant to Be a Gentle Companion, Not a Diagnosis Tool

- How Robin Works: A Hybrid Model of Autonomy and Human Oversight

- The Risks of Familiarity: When Comfort Can Cross Into Obsession or Deception

- Future of Care: Expanding, Ethical, and Post-Novelty Considerations

A Purpose-Built Comfort Robot: Robin Is Meant to Be a Gentle Companion, Not a Diagnosis Tool

Robin is built to comfort pediatric patients and provide light engagement, not to diagnose or replace human caregivers. A speech-language pathologist at HealthBridge Children’s Hospital in Orange County, California, described Robin as bringing joy to everyone: “She walks down the halls, everyone loves to chat with her, say hello.” The device uses playful interactions, including singing, silly faces, and games, to ease anxiety and fill quiet moments in hospitals and nursing homes. Proponents say the technology can extend the reach of overworked staff by offering consistent, friendly interactions that patients remember. Expper emphasizes that the technology is designed to address the needs of children and older adults, with the aim of forming meaningful and enduring relationships. While the company does not present Robin as a replacement for human touch, it promotes the idea that compassion can be amplified through intelligent tools.

How Robin Works: A Hybrid Model of Autonomy and Human Oversight

Robin’s autonomy is limited: roughly 30 percent of its functions run independently, while a team of remote teleoperators handles the rest. This hybrid model is common for embodied AI in healthcare, balancing interaction with safety and oversight. The system collects data from every interaction, and that data is said to be handled in a HIPAA-compliant way. Supporters point to moments of genuine connection, such as kids dancing to their favorite songs or being greeted by name on a return visit, as proof of real, reassuring interaction. Critics, however, note that much of the “memory” and personalization may come from human operators guiding the robot’s responses, raising questions about where the line between machine and person really lies. Advocates argue that the ability to mirror a patient’s mood, laughing when the patient is happy and empathizing when the patient is down, helps create a supportive atmosphere in often stressful settings.

The Risks of Familiarity: When Comfort Can Cross Into Obsession or Deception

Robin’s warmth has sparked questions about the risks of creating a highly familiar AI companion. Therapists warn that constant validation, without healthy reality checks, can worsen mental health rather than improve it. The robot’s ability to remember details from previous conversations, sometimes via human input, can make the technology seem dangerously human. While the goal is comforting companionship, there is concern that children and families might form attachments that become hard to distinguish from real human care as their needs evolve. The debate isn’t just about robots; it’s about the broader use of AI in sensitive settings and how to protect patients’ mental well-being while making use of innovative tools.

Future of Care: Expanding, Ethical, and Post-Novelty Considerations

Robin’s adoption across 30 facilities signals a broader trend toward integrating AI into frontline care. Expper’s chief executive says the aim is for Robin to take on more responsibilities and become an indispensable part of care delivery. Yet that push raises ethical questions about outsourcing essential human contact and about the long-term implications for how care is delivered. Hospitals and families will watch closely to see whether this technology stays focused on comfort and connection or evolves into a more complex role in diagnosis and decision-making. The conversation will shape not just Robin’s path, but the future of AI in healthcare as a whole.