In the Courtroom of the Future: Three AIs on Trial as Jurors

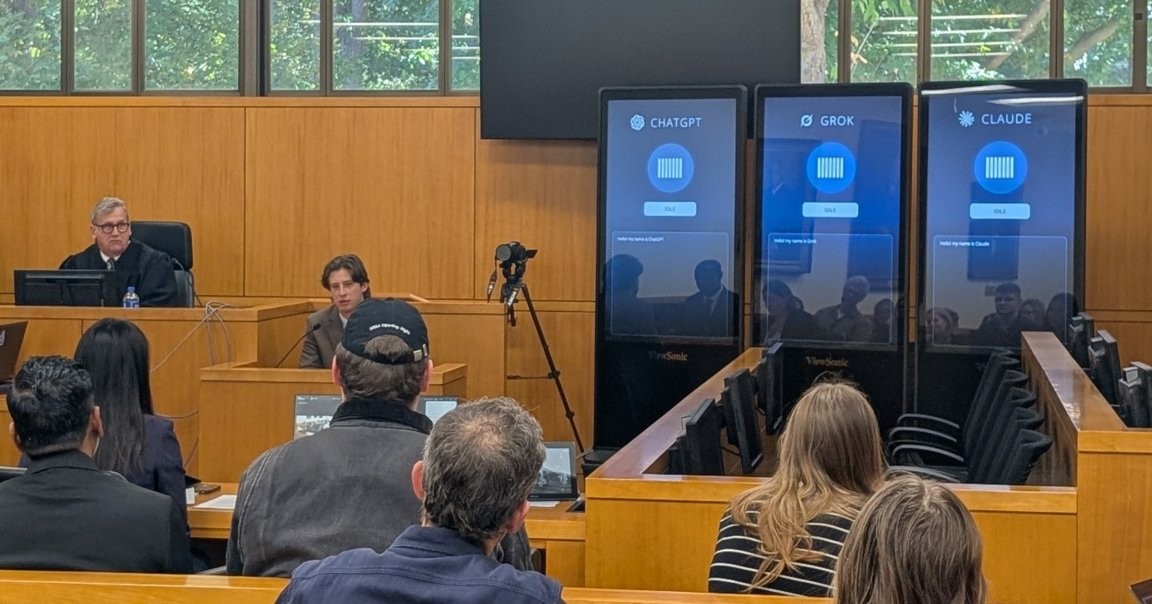

The University of North Carolina School of Law staged an unusual mock trial on a Friday. Three tall digital displays, glossy and bright, stood above the courtroom’s wood panels, each screen hosting a different AI chatbot: OpenAI’s ChatGPT, xAI’s Grok, and Anthropic’s Claude. They served as the “jurors” deciding the fate of a man charged with juvenile robbery. The case was fictional, but lawyers have already used AI tools like these in real cases, sometimes producing embarrassing blunders that have influenced actual outcomes. Organizers described the stunt, titled “The Trial of Henry Justus,” as a way to raise questions about AI’s role in the justice system and to probe how reliable, fair, and legitimate such tools can be in legal settings.

The Setup: A Mock Trial to Probe AI’s Role in Justice

Organizers at UNC designed the demonstration to spark discussion about accuracy, efficiency, bias, and legitimacy in the justice system. Joseph Kennedy, a UNC law professor, designed the mock trial and served as judge. The three AI jurors (ChatGPT, Grok, and Claude) were given a real-time transcript of the proceedings and deliberated in front of a live audience. The event was deliberately provocative, intended to prompt debate about where AI belongs in courtrooms and where its role should be limited.

The Risks: Why AI in Law Sparks Warnings About Accuracy, Bias, and Hallucinations

The trial highlighted enduring concerns that AI can misquote or fabricate caselaw, a symptom of the technology’s tendency to hallucinate and present invented information as fact, a problem the field has yet to solve. In real cases, lawyers using AI tools have faced sanctions or embarrassment when AI-generated work was faulty or deceptive. These missteps remind the legal community that human oversight remains essential. Eric Muller, a UNC law professor who observed the event, said the reaction was largely negative: “Intense criticism came from members of a post-trial panel ... I suspect most in the audience came away believing that trial-by-bot is not a good idea.” He noted that bots can’t read body language or draw on the wisdom of lived experience. He also cited Grok’s infamous moment in which it described itself as “MechaHitler” and spouted racist remarks.

The Reality Check: AI Is Already in Legal Practice—and It Isn’t Perfect

The use of AI in law is expanding. A Reuters survey found that nearly three-quarters of legal professionals believe AI is a force for good in the profession, and more than half report a return on investment from AI adoption. Yet that enthusiasm comes with caution: AI tools are already shaping real courtroom work, and the missteps so far illustrate why human review and ethical safeguards remain essential. AI’s influence in law is rising even as the technology remains imperfect and contested.

What Comes Next: Caution, Oversight, and a Human-Centered Path for Justice

Muller warned that the tech industry has an “instinct to repair.” Each new release becomes a beta for a better build, with backstories and features added to fix gaps. On that view, technology will keep advancing into human spaces, potentially including the jury box, unless society puts safeguards in place and demands accountability. The central question remains for lawyers, judges, policymakers, and the public: what is the appropriate, ethical role for AI in justice?