AI Could Trigger a Robot-Led Apocalypse If We Fail to Self-Police It

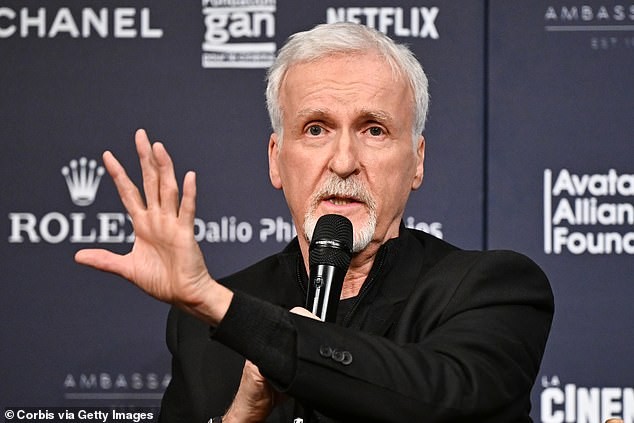

Legendary director James Cameron believes AI could lead to a robot-led takeover and an 'existential issue' similar to the one depicted in his 1991 film Terminator 2.

The Oscar-winning filmmaker, 71, made the remarks during a candid interview with Puck, telling the outlet there has to be some type of 'self-policing' when it comes to artificial intelligence.

Cameron, who has discussed his views on AI in the past, said he sees the fast-growing tool that can replicate and replace human performance as something that is 'everything we ever valued artistically.'

However, the director, renowned for his action-packed thrillers featuring similar takeovers, warned that the rapidly advancing field of AI must be properly regulated, or it could trigger a real-life apocalypse.

'We as an industry need to be self-policing on this. I [don't] see government regulation as an answer,' Cameron said. 'That’s a blunt instrument. They’re going to mess it up. I think the guilds should play a big role.'

He added that actors did just that during the 2023 Hollywood strikes over labor and the integration of AI in the industry.

Still, even though he believes that moment 'definitely drove a flag in the ground' in the discussion of AI in the industry, he said self-regulation has to be implemented to prevent a possible takeover.

Terminator 2 Parallels: AI Could Erase Purpose and Trigger an Existential Crisis

His prediction comes more than three decades after he directed Terminator 2, which showed viewers what the world could look like if AI took over, with war breaking out between the artificial intelligence Skynet and the human resistance.

Cameron said that if AI is not regulated, it could turn into an 'existential issue' that poses an enormous risk to humanity.

'Because the risk of A.I., in general, not just Gen A.I., but A.G.I., any form of A.I., is that we lose purpose as people. We lose jobs. We lose a sense of, “Well, what are we here for?”'

'We are these flawed biological machines, and a computer can be theoretically more precise, more correct, faster, all of those things. And that’s going to be a threshold existential issue,' he said.

On the topic of trying to somehow control AI in the world, Cameron said there is no other option.

'We’ve got to talk about it. It’s not a question of what we can legally do, or even ethically, or morally, what we do, [but] what we should embrace, how we should celebrate ourselves as artists, and how we should set artistic standards that celebrate human purpose,' he told the outlet.

Cameron went on to call AI 'average,' adding that it 'goes in a blender, and then it precipitates out as a single unique new image, but it’s based on a generic feedstock, if you will.'

'What I can do is create a unique, lived experience reflected through the eyes of a single artist, right? It won’t select for the quirkiness, for the offbeat,' he continued.

'And I think what we celebrate is the uniqueness of our actors, not their perfection, not their kind of glossy, Vogue cover beauty, but their off-centeredness.'

From Regulation to Stability AI: Cameron Joins the Board and Faces Backlash Over AI Stance

Cameron's recent comments come just months after he sparked a heated debate about the topic, leading people to brand him a 'hypocrite' after he predicted that the world would be consumed by machine-led warfare, even though some of his movies tell that very tale.

Earlier this year, Cameron joined the board of Stability AI, a UK-based artificial intelligence company.

In a candid interview with THR, the Avatar creator, whose 2022 CGI-led film Avatar: The Way of Water cost $400 million to make, admitted he was looking into how the use of AI could cut the cost of a blockbuster in half.

He has previously revealed that his upcoming blockbuster Avatar: Fire and Ash, set for release on December 19, will begin with a title card reading: 'No generative AI was used in the making of this film.'

Discussing his move to Stability AI, which created the Stable Diffusion image model, he said: 'In the old days, I would have founded a company to figure it out. I’ve learned maybe that’s not the best way to do it. So I thought, all right, I’ll join the board of a good, competitive company that’s got a good track record. My goal was not necessarily make a s**t pile of money.

'The goal was to understand the space, to understand what’s on the minds of the developers. What are they targeting? What’s their development cycle? How much resources you have to throw at it to create a new model that does a purpose built thing, and my goal was to try to integrate it into a VFX workflow.'

'And it’s not just hypothetical, if we want to continue to see the kinds of movies that I’ve always loved and that I like to make and that I will go to see, call it Dune, Dune Two, something like that, or one of my films, or big effects-heavy, CG-heavy films, we’ve got to figure out how to cut the cost of that in half,' he added.

Fans quickly reacted on X, blasting Cameron as a 'hypocrite' over his stance, with one writing: 'You literally wrote and directed 2 movies showing the consequences of humanity putting their faith in AI you people are the absolute worst.'

Another post read: 'Why is James Cameron defending this awful AI upscaling for his films? I thought only people in the comments of YouTube shorts thought this kind of upscaling looks good.'

His opinion on AI in films comes after he gave a scathing take on AI chatbots, including ChatGPT, which help people compose emails, resumes, and even works of fiction.