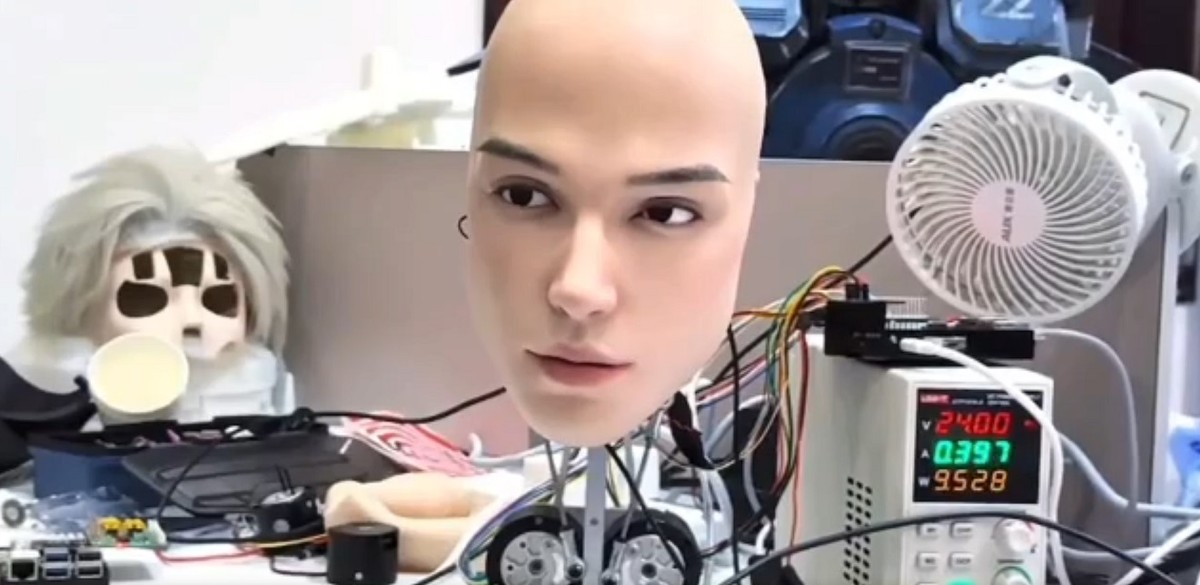

A Robot Face That Feels Human: 25 Micro Motors Bring Blink-Perfect Expressions to Life

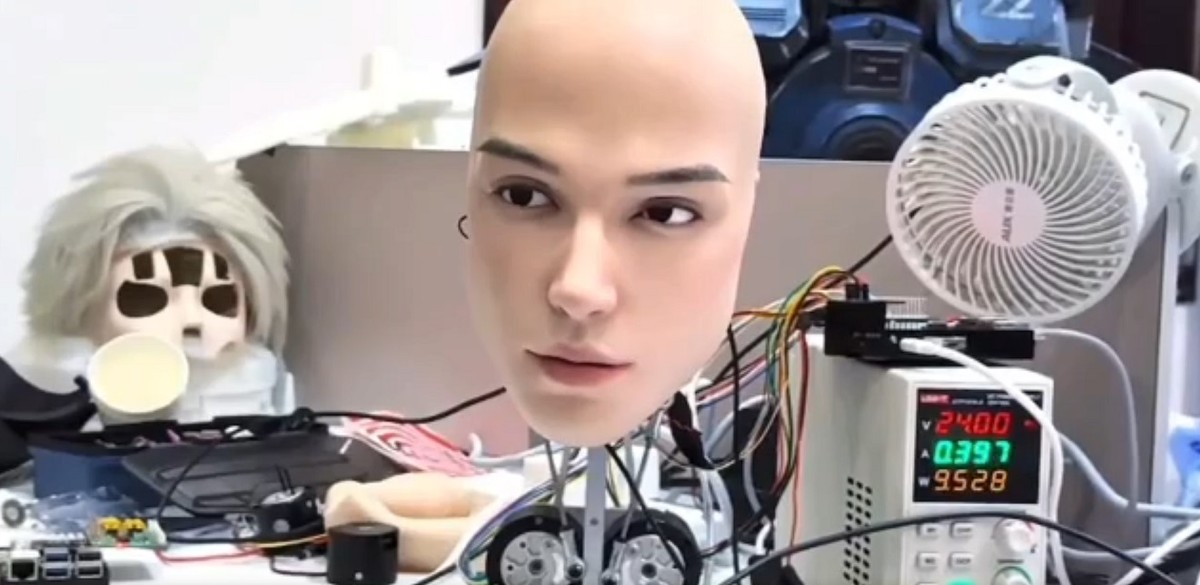

AheadForm has unveiled Origin M1, a highly realistic robotic facial interface designed to reproduce subtle human emotions in real time. The system uses 25 miniature motors to create barely perceptible muscle-like movements, including blinking and shifts in gaze. That level of detail may lead observers to treat the robot as a genuine conversation partner. The development marks a further step in the ongoing convergence of human and AI interaction.

Inside the Craft: How Origin M1 Moves Like a Real Face

The design relies on 25 tiny motors to reproduce facial micro-expressions. By coordinating these movements with lifelike timing, the system creates the impression of a living presence. Observers may feel drawn into the conversation as the robot's facial movements respond to conversational cues.

Eyes That See: Sensors in the Artificial Pupils

AheadForm integrated sensors and cameras directly into the artificial pupils, allowing the robot to visually recognize its surroundings. A built-in audio system with microphones and speakers enables real-time, natural-sounding dialogue.

A Universal Platform: Compatibility and Potential Uses

AheadForm describes Origin M1 as a universal product compatible with various base robot systems. That flexibility could accelerate deployment across service, education, and research robots. But it also raises questions about how people will distinguish real emotion from machine-driven simulation, and what that means for trust.

What This Means for Our Future

The line between human and machine expression is blurring as technology grows more lifelike. We may see more natural interactions with robots that can understand and respond to us in real time. Responsible deployment and transparency about when people are dealing with a machine will be essential as this becomes the new normal.